Production bugs wreck sprints—you lose time digging into logs and rushing fixes, and debugging in production risks stability and stalls meaningful progress.

Fixing a defect after release is often estimated to cost far more, by some accounts up to 100 times more, than fixing it early in development. As bugs move downstream, other code comes to depend on the faulty behavior, and the engineering effort needed to fix them grows. Unit tests catch regressions early and cut down on emergency patches. Maintaining them takes time, but skipping tests leads to brittle code that is hard to change.

This guide outlines techniques, best practices, and tools for writing reliable tests that reduce maintenance overhead and support long-term code stability.

What is Automated Unit Testing, and Why Does It Matter?

A unit is a small, testable piece of code, such as a function or method. Automated unit tests check these parts under different scenarios, catching regressions early, simplifying refactoring, and keeping your codebase clean. They run in isolation (with no external dependencies) and deliver quick, reliable feedback.

Unlike manual testing, automated unit tests run continuously and provide instant quality checks. Early detection of bugs reduces debugging time, which is critical in microservices or dependency-heavy projects.

You’ll still use many types of testing (e.g., data-driven, functional, exploratory), but unit testing is foundational for verifying core functionality. Unit testing tools like JUnit or pytest are frameworks for creating and running tests; actual automation typically comes from running them in CI/CD pipelines on every change.

A visual summary of automated testing advantages: catching bugs early, preventing regressions, and ensuring overall stability

Why does automated unit testing improve code quality?

Automated unit testing forces you to write cleaner, more modular code. When your functions are easy to test, they naturally follow the Single Responsibility Principle (SRP), meaning fewer hidden dependencies and unpredictable side effects. Good tests push you toward better architecture by default. If your code is a nightmare to test, that’s a red flag for deeper design issues.
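To make the point about hidden dependencies concrete, here is a minimal sketch. The function names and the 9:00 to 17:00 opening hours are hypothetical, chosen only for illustration. The first version reads the system clock internally, so its result depends on when the test happens to run. The second takes the current time as an argument, which makes it easy to test.

```python
from datetime import datetime, time

OPENING = time(9, 0)   # hypothetical opening hours, for illustration only
CLOSING = time(17, 0)


def is_store_open_hidden_dependency():
    """Hard to test: the result depends on the real system clock."""
    return OPENING <= datetime.now().time() < CLOSING


def is_store_open(now):
    """Easy to test: the caller supplies the time, so there is no hidden input."""
    return OPENING <= now.time() < CLOSING


def test_store_is_open_at_noon():
    assert is_store_open(datetime(2024, 1, 15, 12, 0))


def test_store_is_closed_at_closing_time():
    assert not is_store_open(datetime(2024, 1, 15, 17, 0))
```

Passing the dependency in is a simple form of dependency injection: production code calls is_store_open(datetime.now()), while tests pass a fixed value.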

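Additionally, automated tests help maintain a consistent level of quality across the codebase by exercising edge cases and error paths early on. Concurrency issues and integration quirks usually need dedicated integration or stress tests on top of unit tests. Coverage is a useful signal of how much of the code your tests exercise, but it measures execution, not correctness, so treat it as one indicator of reliability and maintainability rather than proof.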

How does automated unit testing save developers time and effort?

Automated unit testing catches bugs before they become a problem, saving you from painful rework and production nightmares. Every commit runs tests automatically, flagging issues instantly so you’re not stuck manually debugging later. Instead of firefighting regressions, you can focus on shipping new features.

By validating code in parallel environments (for example, using containerized CI/CD pipelines), teams can test multiple branches simultaneously without stepping on each other’s work. When tests are well-structured—using mocking frameworks, dependency injection, and clear naming—developers quickly pinpoint issues, fix them, and move on. The result is a shorter feedback loop, less context switching, and a smoother path to delivering stable, high-quality releases.

Monitoring code quality metrics alongside these tests catches issues early and preserves a maintainable codebase.

Why is automated unit testing essential for code maintenance?

Code doesn’t stay static. It evolves and grows as teams add features, refactor older sections, or switch out dependencies. Automated unit tests keep the changes in check, making sure nothing accidentally breaks along the way. And if something does go wrong? Tests pinpoint the problem fast, so you’re not digging through a mess of changes to find what broke.

Over the long term, a robust test suite becomes living documentation. New developers can see exactly how each part of the system should behave without digging through outdated manuals. Automated unit testing is necessary if you want stable, maintainable code that can handle growth and changing requirements.

Common Challenges In Automated Unit Testing

Writing effective tests demands time and thoroughness, which can clash with tight release deadlines. Tests must also be updated whenever the code changes; this is an ongoing commitment.

Skipping tests might speed up initial development but backfires in the long term. It results in fragile code that is riskier to modify and debug.

Having many tests doesn’t guarantee that every scenario is covered. Pair static analysis (which catches issues before the code runs) with runtime monitoring (which surfaces real-world failures), and use mutation testing, which makes small deliberate changes to your code to check that the suite notices them. Even so, automated tests alone aren’t enough. You need a strategic approach that includes reviews, monitoring, and best practices, and on the security side, knowing the difference between vulnerability assessments and penetration testing.
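The idea behind mutation testing can be sketched by hand. In the example below, the function, the mutant, and both "suites" are hypothetical; a real mutation testing tool would generate the mutants and run your actual tests automatically. The point is only to show what it means for a mutant to survive or be killed.

```python
def is_adult(age):
    """Original implementation: 18 and over counts as an adult."""
    return age >= 18


def is_adult_mutant(age):
    """Hand-written mutant: '>=' has been changed to '>'."""
    return age > 18


def weak_suite(fn):
    """Checks only values far from the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    """Adds the boundary value, where the mutant behaves differently."""
    return weak_suite(fn) and fn(18) is True


# Both suites pass on the original code. The weak suite also passes on the
# mutant (the mutant "survives"), which reveals a gap in the tests. The strong
# suite fails on the mutant (the mutant is "killed").
```

A surviving mutant is a hint that a test is missing, in this case one for the boundary value 18.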

Some frameworks flood you with false positives, draining your time and causing you to miss real issues. This noise can distract from genuine security threats, tempt teams into workarounds that sidestep security best practices (like the ones on GitHub), and burn out engineering teams.

Agentic AI systems like EarlyAI help autonomously manage test maintenance, spot flaky tests, and refine coverage with minimal input. How? They adapt to your evolving codebase, optimizing test suites and reducing manual effort. That means less time fixing tests and more time shipping solid software faster.

A Developer’s Guide to Automated Unit Testing

When automated unit tests are done right, they catch bugs early, prevent regressions, and speed up development. But if you’re not careful, tests can become flaky, slow, or outright useless.

This guide keeps it simple with four key steps to writing solid unit tests that actually help, not hurt. No bloated test suites, no pointless maintenance headaches—just clean, reliable code that won’t break on you.

1: Choose The Right Testing Framework

Choosing the right testing framework is part of effective testing. Python unit testing tools like pytest and unittest provide flexible, reliable options. For Java projects, JUnit provides robust annotations and assertions. If you're working with JavaScript, Jest offers an all-in-one solution with built-in mocking.

Each tool has its strengths, so choosing one that fits your tech stack and testing needs is key. The remaining steps use pytest.

2: Write Your First Unit Test

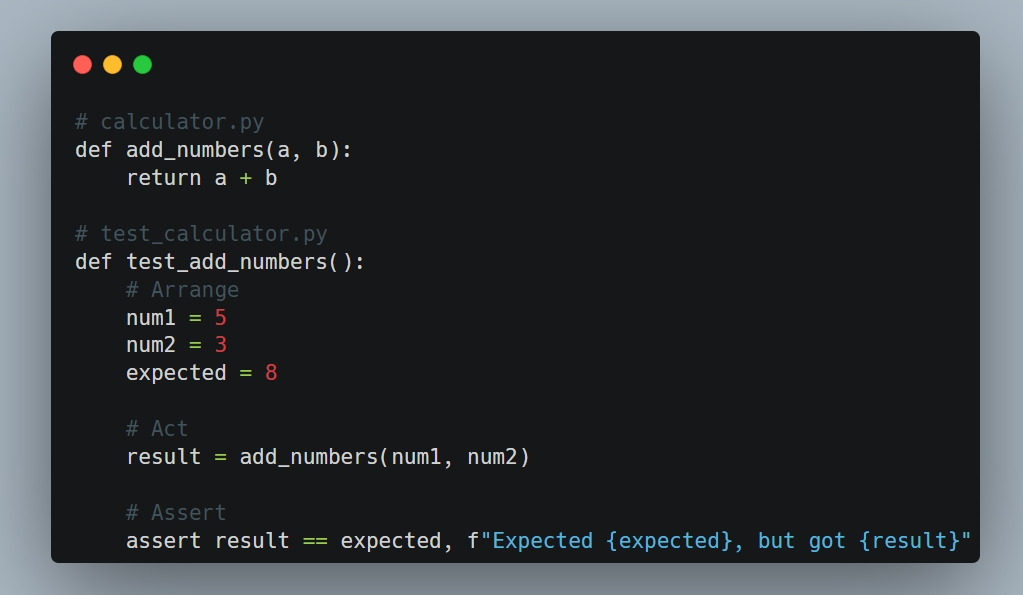

Let's write a simple test using pytest. Here's a basic function that adds two numbers:

A simple Python function and its corresponding test in calculator.py demonstrating automated unit testing for addition.

This test follows the Arrange-Act-Assert (AAA) pattern:

- Arrange: Set up your test data

- Act: Call the function you're testing

- Assert: Verify the results match expectations
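A minimal sketch of what such a function and test might look like, with the three steps marked in comments. The file name comes from the caption above; the function body and test name are illustrative assumptions, not necessarily the original listing.

```python
# calculator.py (illustrative sketch)


def add(a, b):
    """Return the sum of two numbers."""
    return a + b


def test_add_returns_sum_of_two_numbers():
    # Arrange: set up the test data
    a, b = 2, 3

    # Act: call the function under test
    result = add(a, b)

    # Assert: verify the result matches expectations
    assert result == 5
```

Running pytest calculator.py executes the test. In a real project the test would usually live in its own file, such as test_calculator.py, so that pytest's default discovery finds it.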

3: Run And Debug Your Tests

Running your tests is simple: fire them up in the terminal or integrate them into a Continuous Integration (CI) pipeline to catch problems before they become real headaches. With Python, pytest keeps things effortless with an intuitive command-line interface and detailed test reports that help you debug faster.

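For example, running the pytest command from the project root discovers and runs every test file named test_*.py or *_test.py, and adding -v prints one line per test:

Running automated unit tests from the command line with pytest for instant feedback on test results.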

Common errors:

- AssertionError: Expected vs. actual mismatch

- SyntaxError: Invalid code

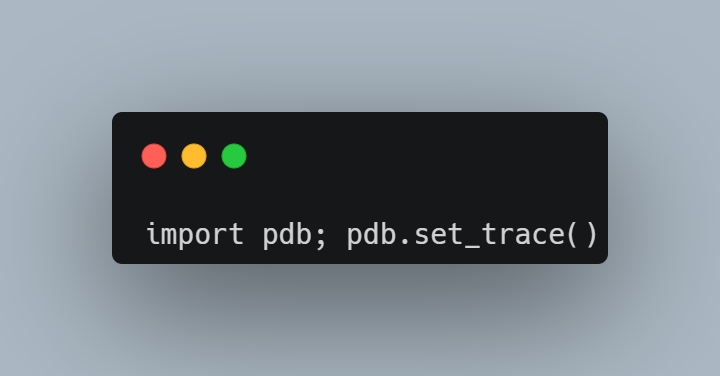

Debugging tip: Insert import pdb; pdb.set_trace() (or the built-in breakpoint() on Python 3.7 and later) just before the failing line inside the test. Execution pauses there, so you can inspect variables and step through the code line by line. Alternatively, pytest --pdb drops into the debugger automatically at the point of failure.

Example usage of the Python pdb debugger (pdb.set_trace()) to inspect and step through test execution.

4: Organize Your Test Files

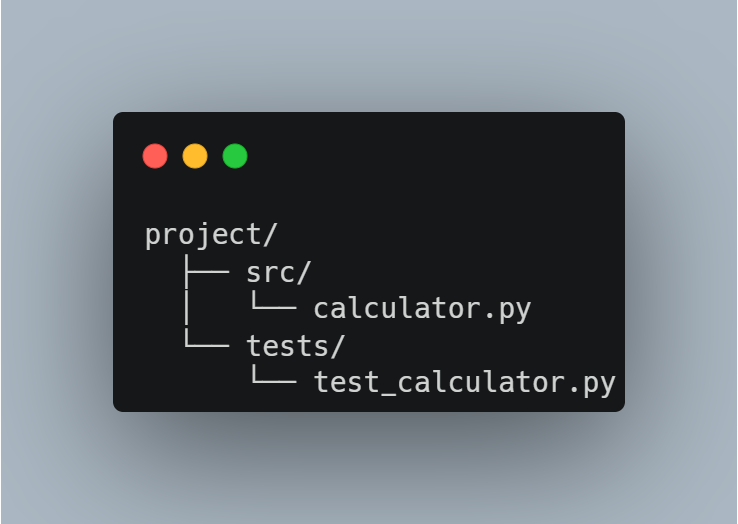

To organize your test files, mirror the structure of your source code in your tests directory. This makes finding, updating, and maintaining tests easier as your project grows.

For example:

A straightforward project layout separating source code (src) from tests (tests) to keep automated tests organized.

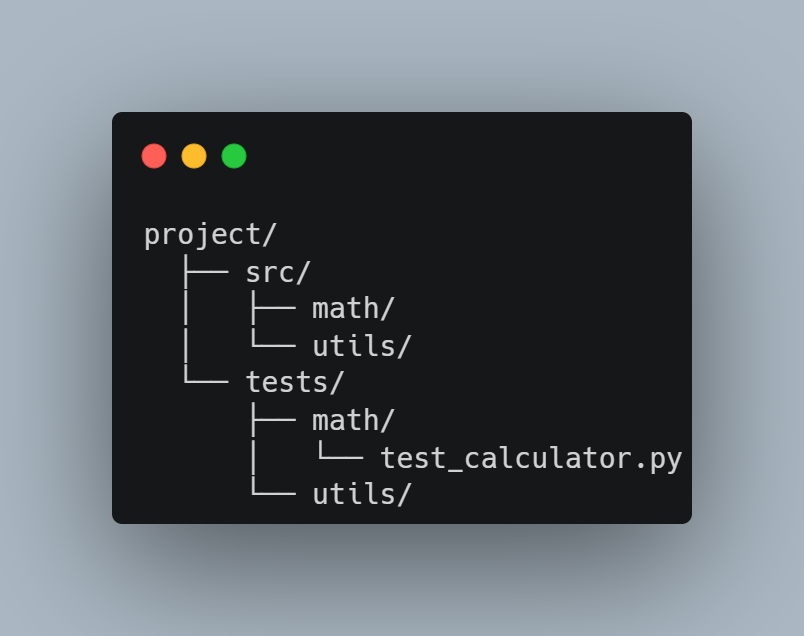

For larger projects, group related tests into subdirectories.

An expanded Python project structure showcasing dedicated subdirectories for math and utility tests.

When a test fails, check for these common pitfalls:

- Edge cases: Are they covered?

- Dependencies: Installed or mocked properly?

- Isolation: Each test must be independent.

- Security concerns: Have you included a vulnerability assessment to catch potential risks?
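The isolation point in the checklist above can be sketched with a pytest fixture. The ShoppingCart class is a hypothetical example, not part of any library. Because fixtures are function-scoped by default, each test receives its own fresh cart, so the tests pass in any order.

```python
import pytest


class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self):
        self.items = []

    def add(self, name, price):
        self.items.append((name, price))

    def total(self):
        return sum(price for _, price in self.items)


@pytest.fixture
def cart():
    # Default scope is "function": pytest builds a new cart for every test,
    # so state from one test never leaks into another.
    return ShoppingCart()


def test_new_cart_total_is_zero(cart):
    assert cart.total() == 0


def test_total_sums_item_prices(cart):
    cart.add("pen", 2)
    cart.add("notebook", 5)
    assert cart.total() == 7
```

Sharing one module-level cart between the two tests instead would make the first test fail whenever it ran after the second.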

Good tests are readable, reliable, and maintainable so that you can refactor with confidence instead of fear.

Best Practices and Automation for Automated Unit Testing

Efficient automated unit testing isn’t just about writing a few checks and calling it a day. It involves strategic naming, proper isolation, test parameterization, coverage analysis, and leveraging automation tools to reduce manual overhead. Below are some best practices that help developers ship code confidently.

Use Specific and Intent-Focused Names

- Why it matters: You’ll revisit code in six months and wonder what a test named test_login() checks.

- How to do it: Go beyond generic naming by including details about the conditions you’re testing. For example, test_user_can_log_in_with_valid_credentials() clarifies that you’re verifying a successful login path using valid data.

Opinion: Short test names may be quick to type but annoying to interpret later. Meaningful names save your future self (and teammates) from a debugging nightmare.
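As a rough illustration, compare a vague name with intent-focused ones. The authenticate function and the in-memory user table are hypothetical stand-ins for a real login system.

```python
USERS = {"alice": "correct-horse"}  # hypothetical user table for illustration


def authenticate(username, password, users):
    """Hypothetical login check against an in-memory user table."""
    return username in users and users[username] == password


# Vague: the name says nothing about the scenario or the expected outcome.
def test_login():
    assert authenticate("alice", "correct-horse", USERS)


# Specific: each name states the condition and the expected behavior.
def test_user_can_log_in_with_valid_credentials():
    assert authenticate("alice", "correct-horse", USERS)


def test_login_fails_with_wrong_password():
    assert not authenticate("alice", "wrong-password", USERS)


def test_login_fails_for_unknown_user():
    assert not authenticate("bob", "correct-horse", USERS)
```

When one of the specific tests fails, the test report alone tells you which scenario broke.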

Simulate External Dependencies for True Unit Testing

- Mock vs. Stub:

  - Mocks track the usage of external services (such as calling a database or API), ensuring your code calls them correctly.

  - Stubs provide hardcoded responses, letting you control the scenario (e.g., “Database returns a user object”).

- Language-Specific Tools:

  - Python: unittest.mock or pytest-mock

  - JavaScript: jest.mock()

  - Java: Mockito

Opinion: If your unit test calls live services, it’s an integration test. Keep unit tests purely local. Mocks and stubs will spare you from network flakiness and slow test runs.
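Here is a minimal sketch of the stub and mock roles using Python's standard unittest.mock. The send_welcome_email function and the user_repo and mailer collaborators are hypothetical names invented for this example. The same Mock class plays both roles: one object returns a canned answer (stub), the other is inspected afterward to verify how it was called (mock).

```python
from unittest.mock import Mock


def send_welcome_email(user_id, user_repo, mailer):
    """Hypothetical function under test: look up a user and email them."""
    user = user_repo.get_user(user_id)
    if user is None:
        return False
    mailer.send(to=user["email"], subject="Welcome!")
    return True


def test_welcome_email_is_sent_to_existing_user():
    # Stub: returns a hardcoded user so no real database is needed.
    user_repo = Mock()
    user_repo.get_user.return_value = {"id": 1, "email": "ada@example.com"}
    # Mock: records calls so we can verify the interaction afterward.
    mailer = Mock()

    assert send_welcome_email(1, user_repo, mailer) is True

    mailer.send.assert_called_once_with(to="ada@example.com", subject="Welcome!")


def test_no_email_is_sent_for_unknown_user():
    user_repo = Mock()
    user_repo.get_user.return_value = None  # stub the "not found" scenario
    mailer = Mock()

    assert send_welcome_email(42, user_repo, mailer) is False

    mailer.send.assert_not_called()
```

Neither test touches a network or database, so both run in milliseconds and cannot fail because of an outage elsewhere.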

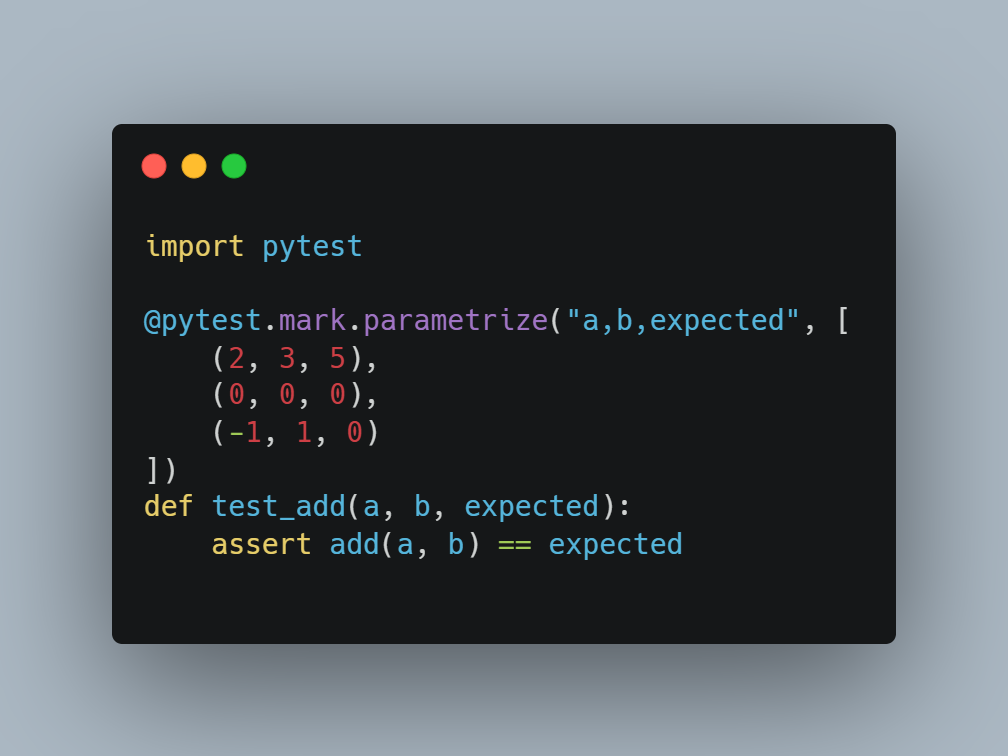

Minimize Redundancy with Parameterized Tests

- Why: You're wasting time if you’re copy-pasting the same test for different inputs. Parameterized tests let you run multiple variations within one test function.

- How: In Python (pytest), use @pytest.mark.parametrize to feed multiple input/output pairs:

Pytest’s @pytest.mark.parametrize runs a single test function with multiple input-output pairs.
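A short sketch of that decorator in use, assuming a simple add function like the one from the earlier step. The specific input values are illustrative.

```python
import pytest


def add(a, b):
    """Return the sum of two numbers."""
    return a + b


@pytest.mark.parametrize(
    "a, b, expected",
    [
        (2, 3, 5),        # typical case
        (-1, 1, 0),       # negative operand
        (0, 0, 0),        # zeros
        (2.5, 0.5, 3.0),  # floats
    ],
)
def test_add(a, b, expected):
    assert add(a, b) == expected
```

pytest reports each tuple as a separate test case, so a failure points at the exact inputs that broke.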

Opinion: Parameterizing is more than a convenience; it encourages you to handle corner cases. If you can’t easily parametrize your tests, your function may be too complex.

Measure and Target Critical Areas

- Coverage Tools:

  - coverage.py (Python)

  - Istanbul (JavaScript)

  - JaCoCo (Java)

- Interpreting Metrics: 100% coverage doesn’t mean 100% bug-free. Aim to cover critical business logic first.

Opinion: Don’t chase arbitrary coverage percentages. Focus on the functionality that can break the app. Coverage is a guide, not a goal.
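A small sketch shows why full coverage is not the same as bug-free. The average function is a hypothetical example. The first test executes every line of it, so a line-coverage report would show 100% for the function, yet an unhandled edge case remains.

```python
def average(values):
    """Return the arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def test_average_of_three_numbers():
    # This single test runs every line of average(): 100% line coverage.
    assert average([2, 4, 6]) == 4


def test_average_of_empty_list_is_unhandled():
    # Coverage did not reveal this: an empty list raises ZeroDivisionError.
    try:
        average([])
    except ZeroDivisionError:
        pass
    else:
        raise AssertionError("expected ZeroDivisionError for empty input")
```

Whether the empty-list case should raise, return zero, or return None is a business decision; the coverage number cannot make it for you.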

Accelerate and Offload Repetitive Tasks

Automated tests strengthen software security by detecting regressions early. Combined with proactive security measures, they help mitigate risks like remote code execution before they become critical vulnerabilities.

AI-Powered Test Generation: Agents like EarlyAI parse your code and auto-generate tests, which spares you from writing boilerplate logic.

Maintenance & Adaptation: As your codebase evolves, AI tools update or suggest updates to existing tests so you don’t chase false failures.

AI can handle the grunt work, but humans need to oversee it. Blind trust in generated tests can lead to missing critical edge cases or domain-specific nuances. Here’s how AI agents improve unit testing.

- Auto-generate meaningful test cases: EarlyAI analyzes your codebase, understands its structure, and automatically generates relevant unit tests.

- Reduce manual boilerplate work: There is no need to write repetitive test scaffolding—EarlyAI takes care of it, freeing up valuable development time.

- Seamless CI/CD integration: AI-powered automation ensures tests are continuously updated and executed as part of your development pipeline.

- Adapt dynamically to code changes: Unlike traditional test automation, EarlyAI learns from your code’s evolution, keeping tests up to date without manual intervention.

EarlyAI in action.

Automated Unit Testing Is Your Workflow’s Next Step Forward

Manual tests can work in the short term but don’t scale.

Yes, manual testing can handle smaller projects, but it quickly becomes a bottleneck as your application expands. More features mean more edge cases, and manual checks can’t keep up. That’s why automated testing is a core part of any serious development process—consistent coverage, quicker feedback loops, and fewer release-day surprises.

Still, setting up and maintaining a comprehensive test suite can be tedious, repetitive work. That’s where AI-driven agents like EarlyAI make the most significant impact, giving you the space and time to focus on the fun stuff like building features, refining user experiences, and pushing the product forward.

Ready to up your testing game? Try EarlyAI for free and see how it streamlines your workflow.